DeepSeek: The low-profile Chinese AI startup shaking up the LLM price war

1/70th the cost of GPT-4 Turbo with comparable performance: how did this dark horse rise?

For years, China has been seen as a master of imitation—not of groundbreaking innovation, but of clever copycats and iterations. From apps to algorithms, the country’s reputation for creating knock-offs has dominated global conversations. But there’s a twist. What if China could do more than just replicate?

In recent years, the US chip embargo has made it even harder for Chinese companies to push the boundaries of AI development. Yet it is under exactly these circumstances that a company called DeepSeek has risen seemingly out of nowhere, defying all expectations.

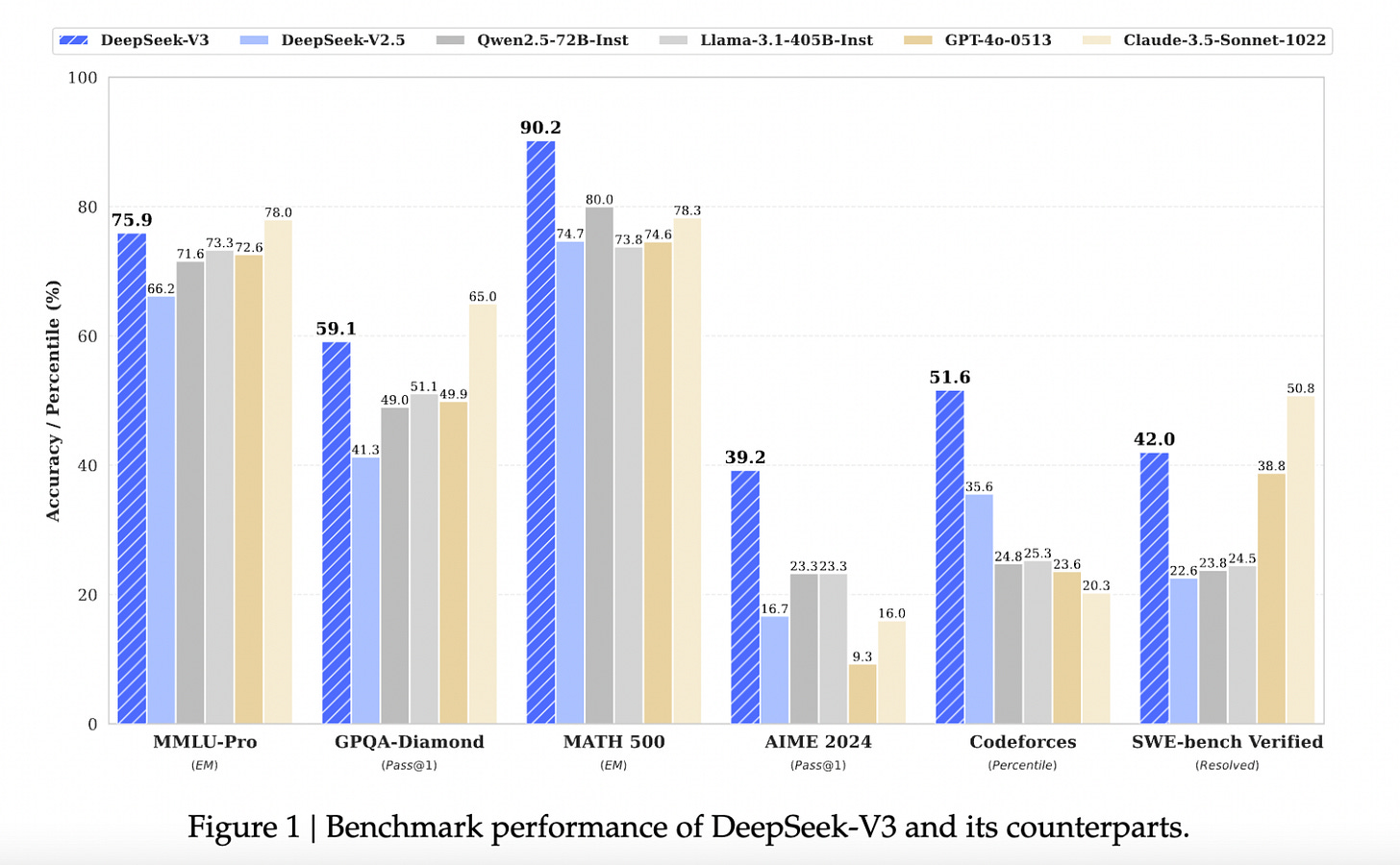

DeepSeek was founded in May 2023 by a team backed by China’s quant hedge fund giant, High-Flyer. Its latest open-source LLM, DeepSeek V3, now competes head-to-head with the likes of GPT-4o, Llama 3.1, and Claude 3.5. But here’s the kicker: this astonishing progress was made on H800 chips, in less than two years, while cutting costs to 1/7 of Llama 3 70B and 1/70 of GPT-4 Turbo. The team achieved this through innovations in the model’s architecture. In math and coding, it even outperformed the hottest models currently coming out of Silicon Valley.

The founder, Liang Wenfeng, has a refreshingly candid, almost geeky style and an unwavering passion for technological innovation. Unlike the other major Chinese tech companies developing LLMs, such as Tencent, Alibaba, and ByteDance, Liang's team focuses solely on the model itself rather than pursuing models and applications simultaneously. Despite not prioritizing commercialization or monetization, the team has managed to remain profitable.

No fundraising, no flash—just results. How did this dark horse come to be?

To be clear, while the DeepSeek team has achieved impressive milestones, it is not yet the world’s top performer. But its speed of development has prompted the tech and investing communities to reconsider China’s role in the AI race. Does it signal inefficiency and bubbles in the current LLM market? Or could this be the beginning of a new chapter in Chinese innovation, one that is not just about copying, but about truly creating?

In today’s post, we translate an exclusive interview between Liang Wenfeng, DeepSeek’s founder, and “Waves,” a news outlet under 36Kr (NASDAQ: KRKR). The interview offers insight not just into how the team achieved such impressive results, but also into the philosophy behind the company's founding and its vision for China's technological landscape. We will also upload a video comparing DeepSeek with OpenAI's models on our new YouTube channel in the coming week. Stay tuned.

Below is our translation of the original article by “Waves.”

DeepSeek Unveiled: A Story of Extreme Technological Idealism from China

Among the seven major AI model startups in China, DeepSeek (深度求索) is the quietest, yet it always manages to make an impression in surprising ways.

A year ago, the surprise came from High-Flyer, the quantitative private equity giant behind DeepSeek, which was the only company outside the big tech firms with a stockpile of over 10,000 A100 chips. A year later, the surprise is that DeepSeek set off China's AI model price war.

Amid the flurry of AI model releases in May, DeepSeek shot to fame with its open-source model DeepSeek V2, which offered an unprecedented cost-performance ratio: inference cost was reduced to just 1 RMB per million tokens, roughly one-seventh that of Llama3 70B and one-seventieth that of GPT-4 Turbo.

DeepSeek quickly earned the nickname “the Pinduoduo of AI,” and soon, giants like ByteDance, Tencent, Baidu, and Alibaba followed suit, lowering their prices. This sparked China’s AI price war.

Yet amid the smoke of battle, a key fact is often overlooked: unlike many tech giants burning money on subsidies, DeepSeek is profitable.

This success stems from DeepSeek’s extensive innovation in model architecture. The company introduced a new MLA (Multi-Head Latent Attention) architecture that reduced GPU memory usage to just 5%-13% of that of the widely used MHA (Multi-Head Attention) architecture. In addition, its proprietary DeepSeekMoESparse structure cut computation requirements to a minimum, ultimately driving down costs.
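The article does not explain where the memory savings come from. As a rough illustration: during text generation a transformer keeps a cache of past keys and values (the KV cache), and MLA, as described in the DeepSeek V2 paper, caches a compressed low-rank latent per token instead of full per-head keys and values. The Python sketch below only counts cached elements. The layer, head, and latent sizes are assumptions loosely modeled on figures published for DeepSeek V2, and `AttentionConfig`, `mha_cache_per_token`, `mla_cache_per_token`, and `cache_gib` are hypothetical names made up for this illustration.

```python
from dataclasses import dataclass

GIB = 2 ** 30  # bytes per GiB


@dataclass(frozen=True)
class AttentionConfig:
    """Shape parameters that determine KV-cache size (illustrative values only)."""
    n_layers: int
    n_heads: int
    head_dim: int
    latent_dim: int    # assumed width of MLA's compressed key/value latent
    rope_key_dim: int  # assumed width of MLA's separate positional (RoPE) key


def mha_cache_per_token(cfg: AttentionConfig) -> int:
    """Cached elements per token for standard multi-head attention."""
    # One full key vector and one full value vector per head, in every layer.
    return 2 * cfg.n_heads * cfg.head_dim * cfg.n_layers


def mla_cache_per_token(cfg: AttentionConfig) -> int:
    """Cached elements per token for multi-head latent attention."""
    # One shared low-rank latent (keys and values are reconstructed from it via
    # learned up-projections) plus one small positional key, in every layer.
    return (cfg.latent_dim + cfg.rope_key_dim) * cfg.n_layers


def cache_gib(elems_per_token: int, n_tokens: int, bytes_per_elem: int = 2) -> float:
    """Total cache size for one sequence; bytes_per_elem=2 assumes fp16/bf16."""
    return elems_per_token * n_tokens * bytes_per_elem / GIB


if __name__ == "__main__":
    # Hypothetical configuration, loosely modeled on figures published for DeepSeek V2.
    cfg = AttentionConfig(n_layers=60, n_heads=128, head_dim=128,
                          latent_dim=512, rope_key_dim=64)
    mha = mha_cache_per_token(cfg)
    mla = mla_cache_per_token(cfg)
    context = 128 * 1024  # tokens in a single long sequence
    print(f"MLA cache is {mla / mha:.2%} of the MHA cache")
    print(f"MHA: {cache_gib(mha, context):.1f} GiB, MLA: {cache_gib(mla, context):.1f} GiB")
```

Under these assumed sizes, the MLA cache comes out under 2% of the MHA cache (about 8.4 GiB versus 480 GiB for a single 128K-token sequence in 16-bit precision). That is lower than the 5%-13% quoted above; the exact ratio depends on head counts, latent width, and which baseline is being compared, so treat the numbers as a sketch of the mechanism rather than a reproduction of DeepSeek's figures.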

In Silicon Valley, DeepSeek is dubbed “the mysterious force from the East.” SemiAnalysis’ chief analyst considers the DeepSeek V2 paper “perhaps the best of the year.” Andrew Carr, a former OpenAI employee, called the paper “full of amazing wisdom” and applied its training settings to his own models. Jack Clark, former policy director at OpenAI and co-founder of Anthropic, stated that DeepSeek “employed a group of incredibly talented minds,” and believes that Chinese-made large models “will become as powerful as drones and electric vehicles, impossible to ignore.”

This level of recognition is rare in an AI field dominated by Silicon Valley. Several industry insiders told us the strong reaction stemmed from the groundbreaking nature of DeepSeek’s architectural innovation, something rare even among open-source AI models worldwide. One AI researcher noted that since the Attention architecture was introduced, very few people have dared to modify it, let alone validate their changes at scale. "Ideas like this would get shot down at the decision-making stage because most people lacked confidence."

Meanwhile, Chinese AI companies have rarely ventured into architectural innovation, partly because of the prevailing belief that the U.S. excels at 0-to-1 technological breakthroughs, while China specializes in 1-to-100 application innovation. Moreover, the returns on such innovation seem too distant: new-generation models will inevitably emerge within a few months, and Chinese companies can simply follow suit and excel at applications. Innovating on model structure means charting an unknown path with many potential failures, costing both time and money.

DeepSeek is clearly a contrarian. Amid a chorus of voices insisting that model technologies will inevitably converge and that following others is the smarter, faster path, DeepSeek values the lessons learned from “detours” and believes that Chinese AI builders can contribute not only to application innovation but also to the global wave of technological breakthroughs.

Many of DeepSeek’s decisions stand apart from the crowd. To date, it is the only startup among China’s seven major AI companies that has chosen to focus solely on research and technology, rejecting the “do both” route of building models and applications at once. It has not pursued consumer (to-C) applications, has not fully embraced commercialization, and has not raised any funds. As a result, DeepSeek is often overlooked by the mainstream, even as it spreads by word of mouth within the AI community.

So, how did DeepSeek come to be? To answer this, we interviewed the reclusive founder, Liang Wenfeng.

Liang, born in the 1980s, has worked behind the scenes since his High-Flyer days and keeps the same low-key style at DeepSeek. Like all of his researchers, he spends his days “reading papers, writing code, and participating in group discussions.” Unlike many quantitative fund founders who have experience in overseas hedge funds and backgrounds in physics or mathematics, Liang is a homegrown talent, having studied AI in the Department of Electronic Engineering at Zhejiang University.

Industry insiders and DeepSeek researchers tell us that Liang is an exceptionally rare figure in China’s AI scene: someone who combines strong infrastructure engineering skills with expertise in model research and the ability to marshal resources. “He can make precise top-level judgments and is stronger than frontline researchers in the details,” they said. “His learning ability is terrifying,” they added, yet he “doesn’t come across as a boss, but rather as a geek.”

This is a rare interview. In it, this technological idealist offers a voice that is particularly scarce in China’s tech community: he is one of the few who put “principles over profits,” and he urges us to recognize the inertia of the times (Baiguan: this refers to the prevailing belief that China has historically been better at manufacturing than at original innovation) and to put “original innovation” center stage.

A year ago, when DeepSeek first launched, we interviewed Liang Wenfeng in “The Madness of High-Flyer: The Path of a Hidden AI Giant’s Large Model.” If that quote from a year ago, “We must embrace ambition with madness, and also embrace sincerity with madness,” still sounded like a beautiful slogan, it has now become a call to action.

Below is the transcript of the interview between journalists from “Waves” and Liang Wenfeng, the founder of DeepSeek.

Part 1: How did the first shot of the price war get fired?

"Waves": After the release of DeepSeek V2, the model quickly sparked a fierce price war in the large model sector. Some say you are the "catfish" of the industry.

Liang Wenfeng: We didn’t aim to be a catfish; we just accidentally became one.

"Waves": Was this outcome unexpected?

Liang Wenfeng: Very unexpected. We didn’t realize how sensitive people were to price. We were just doing things at our own pace and set our price based on our costs. Our principle is not to burn money, but not to earn outrageous profits either. The price is just slightly above cost, with a modest margin.

"Waves": Five days later, Zhipu AI followed suit, and then ByteDance, Alibaba, Baidu, Tencent, and other giants also lowered their prices.

Liang Wenfeng: Zhipu AI lowered the price of an entry-level product, but its models at our level are still quite expensive. ByteDance was the real first mover: it lowered its flagship model to the same price as ours, which triggered other large companies to follow. Their model costs are much higher than ours, so we didn’t expect anyone to price at a loss. It ended up becoming a money-burning subsidy game like in the internet era.

"Waves": From an external perspective, it seems like a battle for users, much like the price wars in the Internet age.

Liang Wenfeng: The goal isn’t primarily to fight for users. We lowered our prices because in exploring the next-generation model structure, our costs dropped. Plus, we believe that both APIs and AI should be accessible and affordable for everyone.

"Waves": Before this, most Chinese companies would directly copy the Llama structure to create applications. Why did you choose to innovate at the model structure level?

Liang Wenfeng: If the goal was just to create an application, then using the Llama structure and quickly rolling out products would make sense. But our destination is AGI, which means we need to research new model structures to build more powerful models within limited resources. This is foundational research necessary to scale up to larger models. Besides model structure, we've also done a lot of research on data construction and how to make models more human-like, which is reflected in the models we've released. Moreover, in terms of training efficiency and inference cost, Llama's structure is already two generations behind the international state of the art.

"Waves": Where does this generation gap come from?

Liang Wenfeng: First, there’s a gap in training efficiency. We estimate that the best domestic models are about one generation behind the best international ones in model structure and training dynamics. This alone means we need twice the computing power to achieve the same results. On data efficiency, we estimate another one-generation gap, meaning we need twice the training data and compute to achieve the same results. Combined, that’s four times the compute. Our goal is to keep narrowing this gap.
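Liang's estimate compounds multiplicatively rather than additively, since each one-generation gap doubles the compute needed for the same result. Written out as a worked equation, with $C$ as illustrative notation for the compute needed to reach a given result (the factor of 2 per generation is his estimate, not a measured value):

$$
\frac{C_{\text{domestic}}}{C_{\text{international}}} \approx \underbrace{2}_{\text{structure and training dynamics}} \times \underbrace{2}_{\text{data efficiency}} = 4
$$

By the same logic, closing either gap alone would halve the multiplier, from 4 to 2.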

"Waves": Why do most Chinese companies aim for both models and applications, while DeepSeek has chosen to focus solely on research and exploration?

Liang Wenfeng: We think it’s more important to participate in the global wave of innovation right now. For many years, Chinese companies have been accustomed to others doing technological innovation and then monetizing the application. But that’s not a given. Our starting point in this wave isn’t to make a quick profit, but to reach the forefront of technology and drive the development of the entire ecosystem.

"Waves": The prevailing mindset from the internet and mobile internet era is that the U.S. is good at technological innovation, while China is better at application.

Liang Wenfeng: We believe that as the economy grows, China should gradually become a contributor, not just a free rider. Over the past 30 years of the IT wave, we haven’t truly participated in technological innovation. We’ve grown used to Moore's Law seemingly falling from the sky: better hardware and software arriving every 18 months while we wait at home. The Scaling Law is being treated the same way.

But in reality, this is the result of generations of relentless innovation in the Western-dominated tech community. The reason we haven't participated in this process before is that we overlooked its existence.

Part 2: The real gap isn't one or two years, but the difference between originality and imitation.

"Waves": DeepSeek V2 has surprised many people in Silicon Valley. Why?

Liang Wenfeng: Among the countless innovations happening in the U.S. every day, this is quite ordinary. They are surprised because it's a Chinese company contributing as an innovator in their game. After all, most Chinese companies are used to following, not innovating.

"Waves": But in the Chinese context, making such a choice is quite a luxury. Large models are a capital-intensive game, and not every company can afford to pursue research-driven innovation without considering commercialization first.

Liang Wenfeng: The cost of innovation is definitely not low, and the past habit of "borrowing" also has a lot to do with our previous national conditions. But now, look at the size of China's economy, or at the profits of large companies like ByteDance and Tencent, which are high even by global standards. What we lack for innovation is definitely not capital, but confidence, and knowing how to organize a high density of talent to innovate effectively.

"Waves": Why do Chinese companies, including large ones with plenty of money, so readily prioritize rapid commercialization?

Liang Wenfeng: For the past thirty years, we’ve only emphasized making money and neglected innovation. Innovation is not entirely driven by business needs; it also requires curiosity and a desire to create. We’ve been shackled by the inertia of the past, but that is also a phase.

"Waves": But you are ultimately a business organization, not a public research institution. You choose innovation and share it through open source; where is your moat then? For example, the MLA architecture innovation in May will probably soon be copied by others.

Liang Wenfeng: In the face of disruptive technology, the moat created by closed source is short-lived. Even OpenAI's closed-source approach can't stop others from catching up. So we anchor our value in our team. Our colleagues grow through this process, accumulate a lot of know-how, and build an innovative organization and culture. That’s our moat.

Open-sourcing and publishing papers don't really cost us anything. For tech people, being followed is a real sense of achievement. Open-sourcing is more of a cultural behavior than a business one. Giving away is actually an additional honor. A company doing this also creates cultural appeal.

"Waves": What do you think of the market-first views of people like venture capitalist Zhu Xiaohu?

Liang Wenfeng: Zhu Xiaohu’s view is internally consistent, but it better suits companies focused on making quick profits. Look at the most profitable companies in the U.S.: they are all high-tech companies that built up their strength over many years.

"Waves": But in large models, technological leadership alone rarely translates into an absolute advantage. What is the bigger thing you’re betting on?

Liang Wenfeng: What we see is that Chinese AI cannot always be in the follower position. We often say there’s a one- or two-year gap between Chinese and U.S. AI, but the real gap is the difference between originality and imitation. If this doesn't change, China will always be a follower. So some exploration is unavoidable.

NVIDIA's leadership isn't just the result of one company’s effort, but of the joint efforts of the entire Western tech community and industry. They can see the next generation of technology trends and have roadmaps in hand. China’s AI development needs such an ecosystem too. Many domestic chip companies have failed to develop because they lack a supporting tech community. China inevitably needs people standing at the cutting edge of technology.

Part 3: More investment does not necessarily lead to more innovation

"Waves": DeepSeek now has an idealistic vibe similar to early OpenAI, and it’s open source. Will you switch to closed source later? OpenAI and Mistral have both gone through the process from open to closed.

Liang Wenfeng: We won’t close the source. We believe that having a strong tech ecosystem first is more important.

"Waves": Do you have any funding plans? There have been media reports that High-Flyer plans to spin off DeepSeek for an independent listing. AI startups in Silicon Valley also inevitably end up tied to big companies.

Liang Wenfeng: There’s no funding plan in the short term. The issue we face has never been money, but rather the embargo on high-end chips.

"Waves": Many believe that building AGI and doing quantitative trading are completely different things. Quant trading can be done quietly, but AGI may require a high-profile approach and alliances to scale up your investment.

Liang Wenfeng: More investment doesn't necessarily lead to more innovation. Otherwise, big companies would have already monopolized all innovation.

"Waves": Are you staying out of applications because you lack operations DNA?

Liang Wenfeng: We believe the current stage is an explosion of technological innovation, not of applications. In the long run, we hope to form an ecosystem where the industry directly uses our technology and products. We only handle foundation models and cutting-edge innovation, and other companies can build to-B and to-C businesses based on DeepSeek. If we can create a complete industrial ecosystem, there’s no need for us to do applications ourselves. Of course, if necessary, we can do applications, but research and technological innovation will always be our top priority.

"Waves": When it comes to API services, why should customers choose DeepSeek over the big companies?

Liang Wenfeng: The future world will likely be one of specialized division of labor. Foundation models require continuous innovation, and big companies have their own boundaries, so they may not be the best fit.

"Waves": But can technology really make a difference? You’ve also said that there is no absolute technical secret.

Liang Wenfeng: There are no technical secrets, but rebuilding takes time and money. NVIDIA’s graphics cards, in theory, hold no technical secrets and are easy to copy, but assembling a team and catching up with the next generation of technology takes time. So the actual moat is still quite wide.

"Waves": After you lowered prices, ByteDance quickly followed, which suggests they felt some kind of threat. How do you view the new dynamics of competition between startups and big companies?

Liang Wenfeng: Honestly, we don’t care much about this. We just happened to do it. Providing cloud services is not our main goal. Our goal is still to achieve AGI.

We haven’t seen any new solutions yet, but big companies don’t have a clear advantage either. They have existing users, but their cash flow businesses are also their burden and will make them vulnerable to disruption.

"Waves": What do you think the future holds for the other six AI startup companies working on large models?

Liang Wenfeng: Perhaps 2 or 3 of them will survive. Right now, they’re all in the money-burning phase, so those with clear positioning and more refined operations will have a better chance of surviving. Others may undergo radical transformations. Valuable things won’t disappear; they will be reborn in a new form.

"Waves": In the High-Flyer era, the competitive attitude was often described as 'doing things its own way,' rarely paying attention to peer comparisons. What is your foundational thinking about competition?

Liang Wenfeng: I often think about whether something can make society run more efficiently, and whether you can find a position in its industrial chain where you excel. As long as the end result makes society more efficient, it’s worth it. Much of what happens in between is temporary, and fixating on it only leads to confusion.

Part 4: A group of young people doing "mysterious and profound" things

"Waves": Jack Clark, the former policy chief at OpenAI and co-founder of Anthropic, believes DeepSeek has hired a group of 'deeply mysterious geniuses.' What kind of people were behind the creation of DeepSeek V2?

Liang Wenfeng: There are no deeply mysterious geniuses. They are mainly fresh graduates from top universities, fourth- or fifth-year PhD interns, and a few people who graduated just a few years ago.

"Waves": Many large model companies are obsessed with recruiting from overseas, and many believe the top 50 talents in this field aren’t in Chinese companies. Where do your people come from?

Liang Wenfeng: There are no overseas returnees on the V2 team; they are all homegrown. The top 50 talents may not be in China, but perhaps we can cultivate such talent ourselves.

"Waves": How did the MLA innovation happen? I heard the idea came from a young researcher’s personal interest.

Liang Wenfeng: After reviewing how the Attention architecture had mainly evolved, he had a sudden idea to design an alternative. But turning the idea into reality took a long time. We formed a team and spent several months getting it to work.

"Waves": This kind of divergent inspiration seems related to your highly innovative organizational structure. During your time at High-Flyer, you rarely assigned goals or tasks from the top down. But with AGI, a field full of uncertainty, has there been more top-down management?

Liang Wenfeng: DeepSeek is also entirely bottom-up. We generally don’t pre-assign tasks, but rather rely on natural division of labor. Everyone has their unique growth experience and comes with their own ideas, so we don’t need to push them. During the exploration process, if they encounter problems, they naturally pull in others for discussion. But when an idea shows potential, we will allocate resources from the top down.

"Waves": I hear that DeepSeek is very flexible with managing chips and people.

Liang Wenfeng: We have no limits when it comes to allocating chips or people. If someone has an idea, they can use the training cluster without approval. Also, since there are no departments or layers, we can easily call on anyone, as long as they’re interested.

"Waves": A loose management style also depends on having selected a group of people driven by strong passion. I’ve heard that you’re good at spotting talent from small details, so people who might not stand out by traditional evaluation metrics can still be hired.

Liang Wenfeng: Our hiring standard has always been passion and curiosity, so many people have some unique experiences, which is really interesting. Many people’s desire to do research far exceeds their concern for money.

"Waves": The Transformer was born at Google’s AI lab, and ChatGPT was born at OpenAI. How do you think the value of innovation from big companies’ AI labs differs from that of startups?

Liang Wenfeng: Whether it's Google’s lab, OpenAI, or even the AI labs of big Chinese companies, they all add value. That it was ultimately OpenAI that pulled it off involved some historical coincidence.

"Waves": Is innovation largely a matter of chance? I noticed that in your office, the meeting rooms in the middle row have doors on both sides that can be easily pushed open. Your colleagues said that this is to leave room for the unexpected. There’s even a story that when Transformer was being developed, someone happened to overhear and joined in, eventually helping to turn it into a universal framework.

Liang Wenfeng: I think innovation is first and foremost a matter of belief. Why does Silicon Valley have such an innovative spirit? First, because they dare to try. When ChatGPT came out, the entire domestic industry lacked confidence in doing cutting-edge innovation. From investors to big companies, everyone felt the gap was too large and suggested sticking to applications. But innovation requires confidence first. This kind of confidence is usually more apparent in young people.

"Waves": But you don’t participate in fundraising, and you rarely speak publicly. In terms of social presence, you’re certainly not as visible as the companies that are actively raising funds. How do you ensure that DeepSeek is the first choice for people working on large models?

Liang Wenfeng: Because we’re doing the hardest thing. The biggest attraction for top talent is to solve the world’s toughest problems. Actually, top talent in China is often underestimated. There is too little hardcore innovation at the societal level, which means they don’t get the opportunity to be recognized. We’re working on the hardest problems, and that’s what attracts them.

"Waves": Recently, OpenAI’s release didn’t bring GPT-5 as many expected. A lot of people think the technology curve is clearly slowing down, and many have started questioning the Scaling Law. What’s your take on that?

Liang Wenfeng: We are more optimistic. The entire industry seems to be developing as expected. OpenAI isn’t a god; they can’t always stay ahead.

"Waves": How long do you think it will take to achieve AGI? Before releasing DeepSeek V2, you released models for code generation and mathematics, and you switched from dense models to MoE. So what does your AGI roadmap look like?

Liang Wenfeng: It could be 2 years, 5 years, or 10 years—anyway, it will happen in our lifetime. As for the roadmap, even internally in our company, there isn’t a unified opinion. But we are definitely focusing on three directions. The first is mathematics and code, the second is multimodality, and the third is natural language itself. Mathematics and code are a natural testing ground for AGI, somewhat like Go—it’s a closed, verifiable system, and it’s possible to achieve high intelligence through self-learning. On the other hand, multimodality and learning in the real world are also necessary for AGI. We remain open to all possibilities.

"Waves": What do you think the endgame of large models looks like?

Liang Wenfeng: There will be companies dedicated to providing foundational models and basic services, with a long chain of specialization. More people will work above that to meet the diverse needs of society.

Part 5: All playbooks to success are products of the previous generation

"Waves": Over the past year, there have been many changes in China’s large model startup scene. For example, Wang Huiwen, who was very active at the beginning of last year, dropped out midway, and the companies that joined later have begun to differentiate.

Liang Wenfeng: Wang Huiwen took on all the losses himself and allowed others to walk away unscathed. He made a choice that was the least beneficial to himself but the best for everyone. He’s a very decent person, and I really admire him for that.

"Waves": Where do you focus most of your energy now?

Liang Wenfeng: Most of my energy is focused on researching the next generation of large models. There are still many unsolved problems.

"Waves": Other large model startups are trying to have it all. After all, technology doesn’t guarantee permanent leadership, and capturing the time window to translate technological advantages into products is also important. Does DeepSeek focus on model research because the model’s capabilities are still not enough?

Liang Wenfeng: All the playbooks to success are products of the previous generation, and they may not hold in the future. Discussing future AI profit models using the business logic of the internet is like discussing General Electric and Coca-Cola when Ma Huateng (CEO of Tencent) started his company. It’s likely to be a case of "carving the boat to search for the sword." (Baiguan: it's a Chinese idiom that means "looking for a solution based on outdated methods or assumptions, even when the circumstances have changed", similar to "barking up the wrong tree")

"Waves": In the past, High-Flyer had a strong technical and innovation gene, and its growth was relatively smooth. Is this why you are more optimistic?

Liang Wenfeng: High-Flyer, to some extent, strengthened our confidence in technology-driven innovation, but it hasn’t always been smooth sailing. We’ve gone through a long accumulation process. What the outside world sees is High-Flyer after 2015, but in reality, we’ve been at it for 16 years.

"Waves": Returning to the topic of original innovation. Now that the economy is entering a downturn and capital is in a cold cycle, will this bring more suppression to original innovation?

Liang Wenfeng: I don’t think so, actually. The adjustment of China’s industrial structure will rely more on hardcore technological innovation. When many people realize that the easy money of the past likely came from good timing, they’ll be more willing to dive into real innovation.

"Waves": So, you’re optimistic about this?

Liang Wenfeng: I grew up in a small town in Guangdong in the 1980s. My father was an elementary school teacher, and in the 1990s, there were many opportunities to make money in Guangdong. Back then, a lot of parents would come to our house, and they generally thought that studying wasn’t useful. But now, looking back, the mindset has changed. Because it’s harder to make money now, there may not even be opportunities to drive a taxi anymore. A generation’s time has passed.

In the future, there will be more and more hardcore innovation. It may not be easy to see right now, because society as a whole needs to be convinced by facts. When society starts recognizing the achievements of people who focus on hardcore innovation, collective thinking will change. We just need more facts and time for that process.
