After DeepSeek, what next?

How Tanka, founded with cutting-edge research from brain sciences, is pioneering the next frontier of AI memory on the road to AGI

In recent years, the story of Chinese companies going global has shifted. It’s no longer just about traditional sectors like infrastructure and manufacturing. We’re now seeing a surge of startups—ranging from small, nimble teams building apps and e-commerce stores to tech-driven ventures pushing for game-changing products and technologies—expanding beyond China’s borders. Some are based in China but have focused on global markets since Day 1, while others are founded by Chinese talents operating entirely overseas. This diversity reflects the dynamism of the Chinese entrepreneurial ecosystem and its growing influence on the global stage.

At Baiguan, we have discussed Chinese entrepreneurs in Mexico and e-commerce logistics beyond Shein and Temu. Now, we’re launching a new series to dig into this trend, showcasing the stories of Chinese-founded or Chinese-connected startups making a global impact. We want to spotlight some of the best companies with interesting ideas that are on the rise and share the stories of those who could be the next big players in the global market.

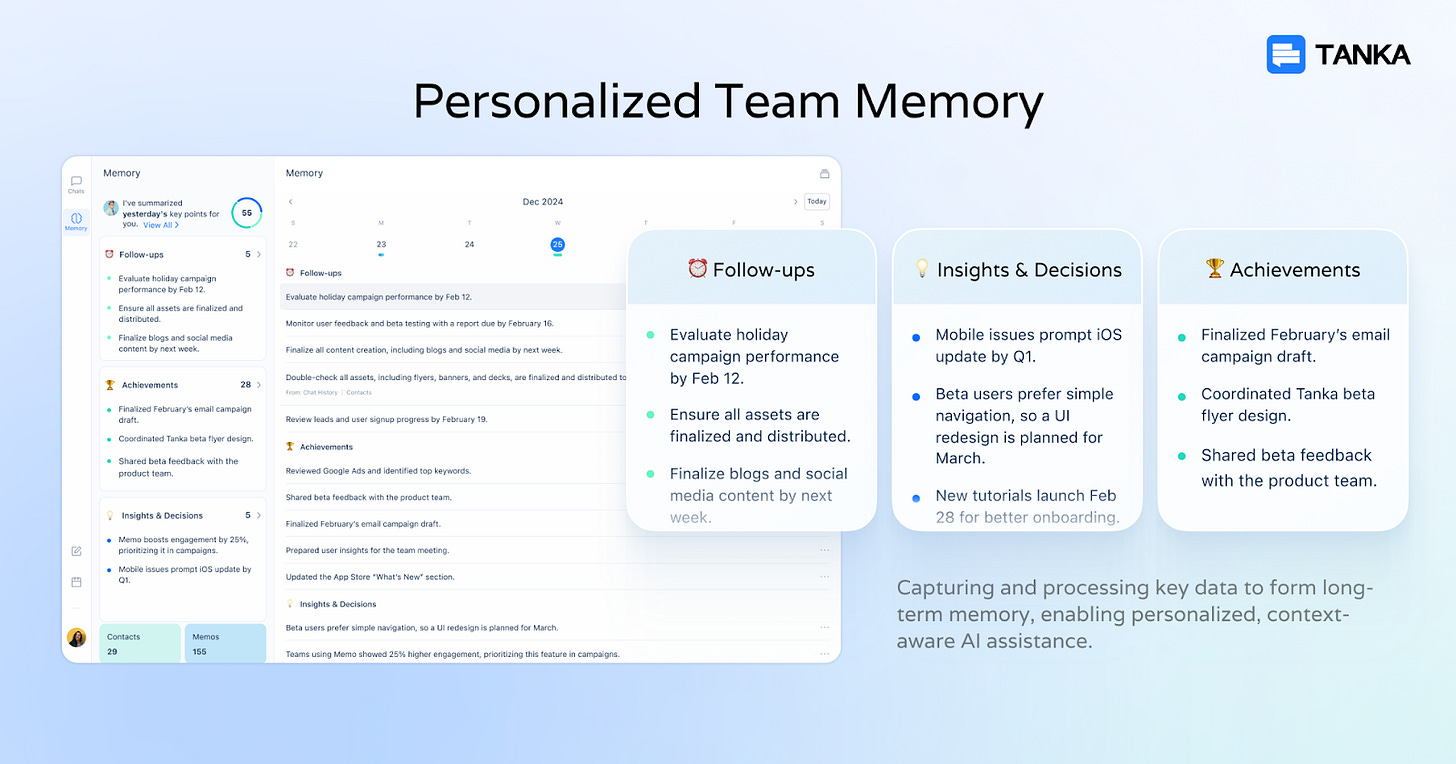

In this edition, we chat with Tanka AI, an AI startup that’s changing the way businesses use technology by blending breakthroughs in neuroscience with AI. Their core product feature, which integrates AI long-term memory into business communication platforms, is helping teams collaborate more effectively and streamline workflows across different industries.

Tanka AI is led by CEO Kisson Lin, a serial entrepreneur. Tanka is also backed by Shanda Group, which was founded by legendary entrepreneur Mr. Chen Tianqiao. After selling the highly successful video game and online literature businesses more than a decade ago, Mr. Chen devoted himself to understanding human consciousness by founding the Tianqiao & Chrissy Chen Institute (TCCI), "a billion-dollar commitment to help advance brain science – Brain Discovery, Brain Treatment, and Brain Augmentation", according to TCCI's website. Tanka AI represents Shanda's latest effort to combine cutting-edge brain science with AI technology.

Now, let’s dive into our conversation with Tanka’s CEO Kisson Lin and Chief AI Scientist Dr. Lidong Bing to learn more about their groundbreaking technology, their vision for the future of AI, and how they’re positioning themselves in the competitive global market. Our topics cover the importance of AI long-term memory, how Tanka draws inspiration from cutting-edge brain science research, how “memory” is factored into humanity’s path towards AGI, and how success stories such as DeepSeek inspire a small team like Tanka to have the ambition to tackle truly foundational research.


Interview with Tanka's CEO Kisson Lin and chief scientist Dr. Lidong Bing:

Tanka is built by a group of entrepreneurial minds who wanted to resolve the technical challenges of AI memory

Amber@Baiguan: Tanka’s product that combines business communication with AI long-term memory seems like a very fascinating concept. Can we start by hearing a bit about the story behind the team and how this product came to be?

Kisson Lin@Tanka:

Tanka is a business messaging tool with AI long-term memory. We’re actually the first in the world to do this.

Why did we come up with this idea? The founding team has a lot of experience in startups. Many of us have worked in large companies and startups before, and we’ve all experienced the pain of using multiple messaging tools. Especially when working with cross-national teams, we’d use Slack, WhatsApp, email, WeChat, among others. It often felt like information was scattered all over the place. I remember once we missed a crucial investor meeting just because of this.

The issue we’re solving is that most knowledge workers spend two to three hours every day sending emails and messages. About 70% to 80% of this information is work-related, but it’s scattered across various messaging tools, documents, project management tools, and more. A lot of this information is not recorded anywhere. Most of the time, it’s just stored in our heads. You have to rely on people’s memories and search through multiple tools to find the chat history. This is something we often waste a lot of time doing.

So, we always had this big pain point. But for a long time, there wasn’t a tool on the market that could solve it. Many people thought creating a business messaging tool like Slack was too heavy. So we waited for a long time.

Then, with the rise of GPT and other AI models, we thought, "Why not just build it ourselves?" We approached it from first principles, thinking about the problem like building a rocket from scratch and breaking it down to figure out what problems a messaging tool should solve. Then, with the AI capabilities we now have, and our unique insights into memory mechanisms gained from cutting-edge brain science research, we could create a minimum viable product that addresses the pain points we’ve experienced.

Building a “second brain” for businesses

Amber@Baiguan: Fascinating. Why is Tanka a messaging tool? What are your core ideas behind the product design?

Kisson Lin@Tanka:

To tackle this pain point, the first thing we had to do was gather all the scattered information. Once collected, we can use AI to process the information, extract the memory, and make it useful. Not all information is valuable—like someone saying "thank you" or talking about what to have for lunch—but we need to extract the most valuable insights. The challenge is finding that needle in the haystack, since what’s valuable varies by company, team, or individual. Once we extract the important information, we need to retrieve it and apply it to daily work scenarios. That’s the solution we wanted to build.

So why is Tanka a messaging tool? The reason is that most communication and decision-making happens in two main ways: through instant messaging (IM) apps, and through meetings, whether online, like Zoom, or offline. IM has become a critical container of conversations, decisions, and to-dos from various sources, so we thought, let’s build a messaging tool that also integrates other tools like email, documents, and project management tools.

We wanted to create a platform where all these different tools could feed into one place, and then our AI could work with that information. The AI would handle tasks like memory extraction, storage, and retrieval, as well as application. The key difference is the memory. What makes it really valuable for users is that our AI system stores team memories, evolving and growing with the team. You can think of it as the team’s “second brain”.

Right now, no tool really does that—no tool serves as a team’s “second brain”. Many companies are working on creating personal assistants or second brains for individuals, but not for teams. That’s where we’re different.

For knowledge workers, having this "second brain" is crucial. Over time, we hope this second brain can grow into an intelligent decision-making advisor, reminding the team about previous decisions and their principles and providing insights that help make more accurate choices. It will also ensure that the team’s collective memory is preserved, which improves long-term team effectiveness.

AI long-term memory: the collective memory defines the DNA of an organization

Amber@Baiguan: I love the concept of the second brain—it’s really cool! But it also sounds like a huge challenge. This leads me to my next question. In the AI field, we’re seeing a lot of focus on large language models. What’s the difference between that and your approach? Does it mean Tanka is just storing more massive amounts of data? What’s the difference between AI long-term memory, and, say, just a very long context window?

Kisson Lin@Tanka:

The key point is that memory matters. While AI models have made a lot of progress, having memory takes it to another level. Memory is the foundation of self-consciousness and personalization. Memory defines who you are, and for a team, the collective memory defines the DNA of the organization. If a team is product-driven or tech-driven, it’s reflected in the decisions they’ve made, and memory helps preserve that.

An AI without memory is just a tool, but with memory, it becomes a far more intelligent digital worker. It becomes a companion in your work and life. Even big players like OpenAI ChatGPT, Google Gemini, Perplexity, and Anthropic Claude are all recognizing the importance of memory for providing more personalized AI services.

Now, let me explain the difference between long context windows and building a complex memory system. A long context window helps AI understand more data, but it still has limitations. If you extend the context window, the response can lose precision. Also, you can't really extend it indefinitely. More often than not, it’s not as simple as feeding a whole chapter or book to the system. The text needs to be structured with chapters, indexes, and causal relationships to tell the story effectively.

How does the human brain store memories then? When the brain sees new information, it tries to connect it to the knowledge already stored in its structure—this is what we call “connecting the dots.” When we store information that way, we remember it better.

Moreover, for humans, every person has a purpose, and so does every company. So, each piece of information that comes in must connect with that purpose so we understand the causal relationship. That’s how you create a more structured and useful memory.

Another commonly used approach is to build an external vectorized database, and we call it RAG (retrieval-augmented generation). But when you retrieve data from that, it’s static and raw—it’s just data with no implications or abstraction of hierarchies, and it doesn’t connect the dots. It doesn't evolve or link together, especially when you have conflicting information. For example, a project might be called "Project Alpha," and later it gets renamed to "Project Beta." If someone asks about "Project Alpha," a raw database will only pull up info about the old name. It won’t tell you the full story of how the project evolved. So, the benefit of memory is that it’s contextual, it evolves, and it connects the dots.
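To make the "Project Alpha" example concrete, here is a minimal Python sketch of a memory store in which a renamed project keeps a single, evolving history. The class and method names (`EntityMemory`, `EvolvingMemory`, `remember`, `rename`, `recall`) are hypothetical and only illustrate the idea described in the interview; this is not Tanka's implementation.

```python
from dataclasses import dataclass, field


@dataclass
class EntityMemory:
    """Hypothetical record for one entity whose name may change over time."""
    names: list[str]                                  # every known name, oldest first
    events: list[str] = field(default_factory=list)   # history in the order it was recorded


class EvolvingMemory:
    """Illustrative store: old and new names resolve to the same record."""

    def __init__(self) -> None:
        self._by_name: dict[str, EntityMemory] = {}

    def remember(self, name: str, event: str) -> None:
        entity = self._by_name.setdefault(name, EntityMemory(names=[name]))
        entity.events.append(event)

    def rename(self, old: str, new: str) -> None:
        entity = self._by_name[old]
        entity.names.append(new)
        entity.events.append(f"renamed from {old} to {new}")
        self._by_name[new] = entity   # both names now point at one record

    def recall(self, name: str) -> list[str]:
        entity = self._by_name.get(name)
        return list(entity.events) if entity else []


memory = EvolvingMemory()
memory.remember("Project Alpha", "kickoff meeting held")
memory.rename("Project Alpha", "Project Beta")
memory.remember("Project Beta", "first release shipped")

# Asking about the old name returns the full story, including the rename.
history = memory.recall("Project Alpha")
```

A lookup keyed only on the literal string "Project Alpha" would stop at the kickoff meeting; linking both names to one record is what lets the query return the later release as well.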

Last but not least, the human brain has something called "abstract memory". It's about linking different data points together to tell a high-level, abstract story. For instance, let's say I'm an entrepreneur and I like spicy food—these are two separate data points. But if we put them together, could they give birth to a new concept? Maybe I’m a risk-taker who enjoys new experiences. So, for example, the next time you recommend a restaurant to me, instead of just recommending a spicy place, you might suggest a restaurant with a unique experience. I think this is what AI long-term memory is all about—it's more human-like and more action-oriented.

The big bet on AI x Memory, founded with a passion for memory and brain science

Amber@Baiguan: It’s super cool. I think what I’m getting from this is that the big difference between memory and data is that memory isn't just a pile of random things. It’s decision-driven and purpose-driven. This is actually really important for businesses making decisions.

I’m curious—how did Tanka decide to bet on AI memory? It seems like such a foundational element of the entire Tanka technology. What led to that decision? Was there any prior research or broader industry discussions that influenced your choice to merge these two fields of “AI” and “memory”?

I also know you have other entrepreneurial experiences related to memory. Could you share a bit about your personal story and how it connects to what you’re doing now?

Kisson Lin@Tanka:

Well, industry-wide, around 2022 to 2023, people were focusing on large language models. Then, when GPT-4 came out, people realized that these models were super smart already. They know world knowledge and can calculate really fast, yet they don’t have a memory of you or your company. AI models have strong generative abilities but lack continuity and contextual grounding. So, every time you interact with them, you have to give them a prompt, and it doesn't feel personalized.

That’s when people started realizing that memory is crucial. And as users spent more time with AI products and accumulated more complex data, they began to expect more. Tanka actually started thinking about memory even earlier, because when the LLMs first came out, we realized they were going to become commoditized—the “scaling law” does scale fast. Of course, DeepSeek proved that there are other ways to optimize it, but it also proves that the LLMs will become ever-more commoditized.

In my opinion, memory to AI is like data to the internet. In the internet era, companies that had vast amounts of data were the most valuable. That’s why we started focusing on memory earlier. Shanda Group, the company that backs Tanka, has also done a lot of research in neuroscience and memory for many years. That’s also why we have a lot of synergies. Shanda sponsors the Tianqiao and Chrissy Chen Institute (TCCI), which funds foundational neuroscience research at places like Caltech, and it has a solid track record in neuroscience research. There is also the Shanda AI Research Institute (SARI), where Dr. Bing came from. They have solid research at the intersection of neuroscience, brain science, and AI. They support us, provide resources, and offer access to researchers. This gives us a competitive edge for the core AI memory technology at Tanka.

As for me, I’ve been focusing on memory for quite a while. One reason is that I got into AI early. Back in 2018, I was still working in the U.S., in charge of new products and R&D strategy at Meta. The company launched many new AI-related initiatives at the time, which made me start to pay attention and realize AI was going to be a big trend.

In 2022, after working in China for two years, I co-founded Mindverse AI. We started the company in early 2022, and by the end of that year, we launched our product MindOS, an agent operating system and marketplace. We were 1 year ahead of ChatGPT’s store. The platform allowed users to build their own agents—like tutors or email assistants—by uploading the agent’s workflow, goals, and memory.

But after a few months of launching, we realized that it didn’t really matter how complex or good your agent’s workflow was. Many people didn’t know how to use it. What people wanted was a single agent that really understood them well. It had to be highly personalized, and that agent could later collaborate with other agents for tasks like teamwork. But the key point is that the agent had to be easy to use, even for someone who didn’t understand AI, and it had to deeply understand the user–this is when memory comes into the picture.

When we think about how we remember a friend, we don’t recall a list of bullet points detailing every characteristic. Instead, it’s first a more abstract memory, a feeling or impression that we then break down to understand how it was formed. That’s how memory works—it’s structured, hierarchical, and has emphasis.

For AI to truly understand a user, it needs to have the same structure: it needs focus, structure, and the ability to constantly update. So, in early 2023, we began studying memory mechanisms, and that led us to work on a product called "Me.Bot", a personal second brain. This led to my exploration of long-term memory for AI.

But during this process, we realized that around 70-80% of information is generated in a work environment. The challenge was organizing the work-related memory, which involves collective vs. individual memory—two very different things. That’s when I realized that the pain point we were facing was broader, and I wanted to address memory for organizations. That’s how I started working on AI long-term memory for business organizations here at Tanka.

Amber@Baiguan: That's really cool. I think a lot of people will look up to your journey. It sounds like it’s been a continuous process of exploration.

Kisson Lin@Tanka: Thank you. Actually, my interest in memory started quite a while ago. My personal hobby is writing sci-fi, and the stories I write often revolve around memory. So, it’s a topic I’m really passionate about—brain science, memory, and artificial intelligence.

Amber@Baiguan: Can we read some of your sci-fi work?

Kisson Lin@Tanka: It’s still in draft form. I can send it to you for feedback :)

Tanka's “aha moment”: How brain science inspired Tanka’s architecture

Amber@Baiguan: Now, I want to move on to understand a bit more about Tanka’s core technology. How does Tanka’s AI memory architecture actually work? Do you have any scientific theories that have inspired your team’s approach? Was there an “aha” moment?

Kisson Lin@Tanka:

There’s actually quite a bit, and Dr. Bing could add more details. But let me start with some general ideas. For example, how does the human brain prioritize long-term memories versus short-term ones? Or how does the brain’s mechanism for “forgetting” work? And how do we recall and connect contexts? These are all areas where we’ve taken inspiration from brain science.

You mentioned the "aha" moment, and there was indeed one for us. There is this “Thousand Brains Theory”, popularized by Jeff Hawkins’ book A Thousand Brains. Traditionally, we’ve considered the human brain a linear structure, like a database. Information comes in from different sources, gets processed in a central unit, and then that unit forms a cognitive model of the world, which becomes our memory.

But the Thousand Brains Theory, though still not fully proven, proposes that intelligence is highly distributed across thousands of cortical columns in the neocortex. Each column independently builds its own model of the world based on sensory input, and rather than relying on a strict hierarchy, these columns collaborate dynamically to reach a consensus. This decentralized model means that the brain is not dependent on a singular high-level processing center; instead, intelligence is emergent from the interplay of multiple cortical columns working in parallel. This shift in perspective is groundbreaking because it challenges the traditional top-down approach to cognition and instead presents the brain as a network of independent yet cooperative learning units, leading to greater robustness, adaptability, and resilience in both biological and artificial intelligence systems.

Tanka’s Multi-Agent Memory System draws inspiration from this idea. Instead of relying on a single long-term memory store, Tanka’s memory system functions like a distributed network, where AI learns from multiple knowledge representations and integrates them over time. This approach mimics how human brains process information in different contexts, ensuring that AI remembers more flexibly and adapts more efficiently.

Moreover, this theory also touches on a key issue we’ve been grappling with: the difference between collective memory and individual memory, and how we can approach both. For instance, there are many challenges when we talk about collective memory. A product manager might interpret information differently from someone in marketing, leading to different memories of the same event.

Even more, different people remember things differently, influenced by their own biases. So, is memory shaped top-down, like when a boss decides what people should remember? Or is it bottom-up?

And then, how do we structure all this complexity? So, when we move from individual memory to collective memory, the complexity skyrockets. But with this new architecture we’re developing, we believe we’ve found a way to approach it.

We realized that we could use the same concept for collective memory—just like the columns in the brain working together. The distributed memory across these columns forms a consensus that results in collective memory. This gave us a real "aha" moment for designing collective memory architecture.
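As a rough illustration of "distributed memories forming a consensus", the Python sketch below lets several team members each hold their own account of an event and accepts an account as collective memory only when a majority agrees. Majority voting is a stand-in chosen for this example; the interview does not say how Tanka's system actually reaches consensus, and the function name and `min_share` threshold are hypothetical.

```python
from collections import Counter
from typing import Optional


def consensus(views: dict[str, str], min_share: float = 0.5) -> Optional[str]:
    """Return the account held by more than `min_share` of members, else None.

    `views` maps a member (or agent) to that member's own memory of an event.
    """
    if not views:
        return None
    account, votes = Counter(views.values()).most_common(1)[0]
    return account if votes / len(views) > min_share else None


# Each role remembers the same meeting slightly differently.
views = {
    "product_manager": "launch moved to May",
    "marketing": "launch moved to May",
    "engineering": "launch moved to June",
}
shared = consensus(views)
```

When no account clears the threshold the function returns `None`, which mirrors the point made above: collective memory is harder than individual memory because disagreement has to be handled explicitly.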

There are many more inspirations from brain science as well. There is a difference between long-term and short-term memory. Just like your brain retains what matters, Tanka filters out noise and saves only critical insights, decisions, and key information.

There is “contextual recall”, meaning that you don’t remember facts in isolation; you recall them in context. Likewise, Tanka connects past conversations to new ones, surfacing relevant insights when needed.

There is “associative thinking”, meaning that humans connect dots across past experiences. Likewise, Tanka links related messages, emails, and documents to provide a full picture of any topic.

It is also important to forget in the right way. Our brains forget unimportant details. Tanka does this too—prioritizing what’s useful while keeping historical knowledge retrievable on demand.

Amber@Baiguan: Fascinating! What use cases do you see for this in the real world? Can you share some examples? Some of our audience might not be familiar with this technical concept.

Kisson Lin@Tanka:

Sure, let’s talk about how we apply it at Tanka. There are very cool things you can do with Tanka. For example, Tanka can summarize everything you’ve done over the past quarter to write a performance review or weekly report for you. Or if you need to retrieve details about a company acquisition decision, such as who was involved and what each of them said, it’s hard to pull that together without an AI memory system, but Tanka can do it for you easily.

So, with Tanka’s memory system, it’s much easier to handle information that’s more complex or has a lot of details from a long time ago. It’s particularly useful when the data density is high, or when the logic is complex.

But my personal favorite feature is our “smart reply”. For example, if an investor sends an email asking for an update on your company's revenue for the last quarter, nowadays, most companies would have to go into their Slack or Notion to check the financial report, then export the data and send it back to the investor. But with Tanka AI, since we’ve collected memories from different sources, we can directly “smart reply” with the data. Of course, you have full control over it and can edit the email. However, drafting with the right information is already a significant boost in productivity. So, this is one feature I personally really love.

Robert@Baiguan: This “request for company financials” example sounds very useful for us. Quarterly updates for shareholders are always time-consuming!

Alpha users are loving it

Amber@Baiguan: Are there other businesses or companies that have already started using this? Any interesting feedback?

Kisson Lin@Tanka:

We actually launched the alpha version of Tanka at the end of September last year, and it was a small-scale launch. Up until a few days ago, we were still waitlist-only. But we've already accumulated thousands of clients on the waitlist.

We were really cautious about giving access because we thought the product wasn’t quite ready yet, so it was more of a phase where we were experimenting and exploring together. But even with that, we’ve opened to about 20+ companies, and they have been using it for a few months now. They've generated 35,000 AI-powered smart replies—that is equivalent to saving over 100 working days of unproductive communication.
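As a quick sanity check on the two quoted figures, the short Python calculation below works out the time saved per smart reply that they imply. The 8-hour working day is an assumption made for this example; the interview does not state how a "working day" was counted.

```python
SMART_REPLIES = 35_000        # figure quoted in the interview
WORKING_DAYS_SAVED = 100      # figure quoted in the interview ("over 100")
HOURS_PER_WORKING_DAY = 8     # assumption, not stated in the interview

minutes_saved = WORKING_DAYS_SAVED * HOURS_PER_WORKING_DAY * 60
minutes_per_reply = minutes_saved / SMART_REPLIES

print(f"{minutes_per_reply:.2f} minutes saved per reply")
```

Under that assumption, each smart reply corresponds to a little under a minute and a half of saved communication time.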

One of the clients is a university research lab with around 90 people. That department has pretty much given up using WhatsApp and Slack.

Amber@Baiguan: That’s really impressive. We hope we can also get on Tanka soon.

Kisson Lin@Tanka: Tanka welcomes you.

Robert@Baiguan: We actually have a feature request for ourselves. So we operate a Discord community for our paying subscribers. Often, because there are too many messages, it’s hard to digest if we haven’t read all the messages for a while. A Tanka plugin could be super helpful in this case.

Kisson Lin@Tanka: Yes, we are adding support for Discord in our product development pipeline.

Robert@Baiguan: That’s great!

Tanka's core technology explained

Amber@Baiguan: Great! Now, I want to move on to some more technical aspects. With this core technology, could you, Dr. Bing, explain a bit more about it from a technical perspective?

Dr. Lidong Bing@Tanka:

As Kisson mentioned, we've already discussed some high-level technical thinking. This architecture diagram is for our AI-driven intelligent decision system. We can use this as an illustration. I will explain how it works with Tanka’s actual use cases of long-term memory like the smart reply.

Let’s take a high-level look at the three main components of our intelligent decision system.

At the bottom here, we have long-term memory. As we’ve discussed before, this is an important part. On top of this long-term memory, we have the causal inference engine on the left and the multi-function calling execution module on the right. These three components form a loop. Let’s dive into the details of these modules.

The "long-term memory" concerns a “subject”—the individual or organization it serves. As Kisson mentioned earlier, to make AI more useful, it must have a comprehensive, accurate, and up-to-date understanding of the entity it serves. So, we build the long-term memory around that subject.

This memory contains three main types of data:

Raw data – For example, daily communication records within a company, like chat logs, which are raw data. It can also include external content like blogs or technical papers. We tag these raw data using large models or manual annotations to form long-term memory.

And we have a basic unit for long-term memory, and we call it a “memory unit”. We organize these units into a "memory graph" because memories are interconnected. For example, a project might have follow-up steps, like bug fixing after stress testing, and these activities are linked together.

Another type of long-term memory is domain ontology or a company’s metadata, which includes the company’s architecture, organizational structure, policies, and internal documents.

The third type is historical execution data, say the user feedback on a smart reply. Historical execution data will also be internalized into part of the long-term memory for further memory optimization.
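The "memory unit" and "memory graph" described above can be pictured with a small Python sketch. The field names, the three `source` labels, and the `MemoryGraph` methods are hypothetical, chosen only to mirror the three data types and the stress-test example from the interview; the real schema is not described in the source.

```python
from dataclasses import dataclass, field


@dataclass
class MemoryUnit:
    """Hypothetical basic unit of long-term memory."""
    unit_id: str
    summary: str
    source: str   # assumed labels: "raw_data", "ontology", "execution_history"


@dataclass
class MemoryGraph:
    """Memory units plus directed links between related units."""
    units: dict[str, MemoryUnit] = field(default_factory=dict)
    edges: dict[str, list[str]] = field(default_factory=dict)

    def add(self, unit: MemoryUnit) -> None:
        self.units[unit.unit_id] = unit
        self.edges.setdefault(unit.unit_id, [])

    def link(self, from_id: str, to_id: str) -> None:
        if from_id not in self.units or to_id not in self.units:
            raise KeyError("both units must be added before linking")
        self.edges[from_id].append(to_id)

    def follow_ups(self, unit_id: str) -> list[MemoryUnit]:
        return [self.units[i] for i in self.edges.get(unit_id, [])]


graph = MemoryGraph()
graph.add(MemoryUnit("m1", "stress test completed", "raw_data"))
graph.add(MemoryUnit("m2", "bug fixed after stress test", "raw_data"))
graph.link("m1", "m2")   # the bug fix follows from the stress test

next_steps = [unit.summary for unit in graph.follow_ups("m1")]
```

Storing links alongside the units is what allows a later query about the stress test to surface the bug fix that followed it.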

So, with the help of long-term memory, for a specific subject, we can answer queries like, "Summarize my work over the past month." This question will be sent to the causal inference engine (on the top left of the diagram). Summarization is a simple task, but we can also handle more complex queries.

For a complex question, the causal inference engine plays a more significant role. It breaks down a question into several sub-questions and figures out the relationship between these sub-questions. When answering each sub-question, we must retrieve and reason through long-term memory. After reasoning, we form a decision, which can be thought of as a reply strategy used by a Tanka feature such as smart reply.

For example, when creating a work summary, it may be necessary to retrieve memory units from the past month, which is the first step. The second step would be to categorize the memory into several modules based on the type of work. Then, for each module, it figures out what progress has been made during this month. Finally, a strategy for generating the answer is formed. Ultimately, this strategy is executed in the multi-function calling module, which then generates a complete summary and returns it to the user.
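The work-summary steps just described (retrieve, categorize, assess progress, form a strategy, execute) can be sketched as a simple Python pipeline. Everything here is a toy stand-in: the `MEMORY` list, the function names, and the string joining that plays the role of the execution module are assumptions for illustration, not the actual engine.

```python
from collections import defaultdict
from datetime import date, timedelta

# Hypothetical memory units: (date, work type, note)
MEMORY = [
    (date(2025, 1, 5), "engineering", "fixed login bug"),
    (date(2025, 1, 20), "hiring", "interviewed two candidates"),
    (date(2025, 1, 25), "engineering", "shipped search feature"),
    (date(2024, 11, 1), "engineering", "old refactor"),
]


def retrieve(today: date, days: int = 30):
    """Step 1: retrieve memory units from the past `days` days."""
    cutoff = today - timedelta(days=days)
    return [m for m in MEMORY if cutoff <= m[0] <= today]


def categorize(units):
    """Step 2: group the units into modules by type of work."""
    modules = defaultdict(list)
    for _, work_type, note in units:
        modules[work_type].append(note)
    return dict(modules)


def plan(modules):
    """Steps 3 and 4: state progress per module and assemble an answer strategy."""
    return [(name, "; ".join(notes)) for name, notes in sorted(modules.items())]


def execute(strategy):
    """Final step: stand-in for the execution module that writes the summary."""
    return "\n".join(f"{name}: {progress}" for name, progress in strategy)


summary = execute(plan(categorize(retrieve(date(2025, 1, 31)))))
```

The November entry falls outside the 30-day window and is dropped at the retrieval step, so the summary covers only the January work, grouped by type.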

Then, during execution, we will send feedback to the long-term memory—for example, whether the user has edited the work summary we generated. Or we simply gave them an option for a "thumbs-up" or "thumbs-down" and see whether the user uses them. This feedback on the execution results will also help us optimize how we build our long-term memory.

So this is the overall architecture of the intelligent decision-making system we are developing at the Shanda AI Research Institute, explained with the actual use case in Tanka.

The challenge of model evaluation: In business, there’s often no definitive right or wrong

Amber@Baiguan: This is very clear. I have a follow-up question. It sounds like evaluating the accuracy of this model is challenging. In a business context, there might not always be a definitive answer. For example, different people might have different opinions on what's right. How does your decision model handle this uncertainty? How do you evaluate whether the AI is making the right decisions or deciding what should be remembered or forgotten?

Dr. Lidong Bing@Tanka:

It’s a good question. First, when we extract memory, like from chat logs, we structure it into categories. For example, we use structured formats like "5W1H" (Who, What, Where, When, Why, How). Another format is the “daily summary”, which includes specific categories like follow-up actions and achievements. So, raw data will have specific structures after the processing.
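For readers unfamiliar with "5W1H", a structured record of this kind might look like the Python sketch below. The `FiveW1H` class and the sample values are hypothetical; the interview names the six categories but does not describe the exact format Tanka uses.

```python
from dataclasses import dataclass, asdict


@dataclass
class FiveW1H:
    """Hypothetical 5W1H record extracted from a chat log; "" means unknown."""
    who: str
    what: str
    where: str
    when: str
    why: str
    how: str


record = FiveW1H(
    who="Alice",
    what="agreed to move the launch date",
    where="",   # not mentioned in the chat
    when="Monday standup",
    why="stress test found bugs",
    how="",     # not mentioned in the chat
)

fields = list(asdict(record))
missing = [name for name, value in asdict(record).items() if not value]
```

Making the empty slots explicit shows which parts of an event the raw chat never stated, which is one practical benefit of forcing raw data into a fixed structure.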

Once the memory is structured, we track its use: for example, how often these memories are hit by queries, and how satisfied the user is with the results we generated for them. For instance, we can judge by looking at whether a result has been edited by the user. With smart reply, we first confirm the information with the user: the AI-generated reply appears in a text input box where the user can edit it. If the user has edited an AI-generated response, it implies that the memory associated with that response may be outdated or inaccurate. This feedback will help us refresh that memory and optimize how the long-term memory should be used.
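The edit-tracking signal can be illustrated with a small Python sketch that counts how often a memory is used and how often the user changes the draft built from it. The `MemoryStats` class and the 50% edit-rate threshold are assumptions made for this example; the interview does not give the actual criteria.

```python
from dataclasses import dataclass


@dataclass
class MemoryStats:
    """Hypothetical usage counters for one memory unit."""
    hits: int = 0     # times the memory was used to draft a reply
    edited: int = 0   # times the user changed that draft before sending

    def record_use(self, draft: str, sent: str) -> None:
        self.hits += 1
        if draft.strip() != sent.strip():
            self.edited += 1

    def needs_refresh(self, max_edit_rate: float = 0.5) -> bool:
        """Flag the memory as possibly outdated if most drafts get edited."""
        return self.hits > 0 and self.edited / self.hits > max_edit_rate


stats = MemoryStats()
stats.record_use("Q3 revenue was $1.0M", "Q3 revenue was $1.2M")   # user corrected it
stats.record_use("Q3 revenue was $1.0M", "Q3 revenue was $1.2M")   # corrected again
stats.record_use("Q3 revenue was $1.0M", "Q3 revenue was $1.0M")   # sent unchanged

stale = stats.needs_refresh()
```

Two of the three drafts were corrected, so the memory behind them is flagged for refresh.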

Amber@Baiguan: That’s a pretty cool mechanism. This ties back to what Kisson was discussing earlier about how Tanka continuously learns from the user's feedback and evolves over time to fit seamlessly with an organization’s needs.

Robert@Baiguan: Hearing about this just makes me realize that if you guys manage to build this product at scale, you will have a strong business moat because you are essentially storing and constantly updating the memory of an organization, in a way that’s relevant for this organization. User stickiness can be incredible if you can manage to pull it off.

Alibaba’s DAMO Academy

Amber@Baiguan: Dr. Bing, I know you previously worked at Alibaba’s DAMO Academy, where there were many fascinating research projects. I’m curious—has your experience there inspired you, and do you think there’s anything you’ve brought from that to your work at Tanka?

Dr. Lidong Bing@Tanka:

Yeah, I think every job change is a two-way process. On one hand, I ask myself, "What value can I bring to this new company?" On the other hand, I also wonder, "What does this new company offer that attracts me?"

From the first perspective, in my six years at DAMO Academy, we worked on many projects and research. I was also responsible for mentoring many PhD students. We also worked on many of Alibaba's international e-commerce projects, such as Lazada, and we undertook large-scale projects like Southeast Asian language models and the world’s first Visual Language Model capable of simultaneously understanding both visual and audio modalities.

The experience I gained from these projects will undoubtedly help me in this new team.

And I think there are two important things I brought from DAMO Academy to Tanka. First, the choice of technology. The strategic choice of the technology plays a huge role in determining the outcome of a project. For example, at Tanka, how we define, extract, and structure memory units is key. Then, once we’ve defined the memory, we need to think about the data flow pipeline for extraction. For example, we need to detect topics in group chats, but since topics often shift and overlap, careful analysis of the data is required to prepare clean inputs for extracting memory units. Finally, we need to organize the extracted memory sources in a way that facilitates their better application later. After joining the new team, I see that many of the technical decisions are directly related to the projects I worked on previously at Alibaba’s DAMO Academy.

Another important point is that, beyond the broader technical choice, it’s all about the details. For example, is our prompt definition precise enough? Prompt engineering is a complex problem on its own. For instance, when referring to the same element in a prompt, are the mentions consistent? Such details may influence the final inference of the large model. Though the impact might be hard to quantify, these small details matter. As a manager, I must ensure that the team pays attention to these details and maintains a meticulous attitude in their work.
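To make the three steps Dr. Bing describes more concrete (detect topics in a group chat, extract memory units, organize them for later use), here is a minimal Python sketch. It is an illustration only and not Tanka's implementation: the `Message` and `MemoryUnit` classes, the stopword list, and the greedy keyword-overlap rule are all assumptions made for this example. In particular, the greedy rule only handles topics that shift one after another; it does not untangle topics that overlap in time, which is the harder case mentioned above.

```python
import re
from dataclasses import dataclass

# Hypothetical stopword list, chosen only for this toy example.
STOPWORDS = {"the", "a", "an", "to", "of", "and", "is", "we", "for", "on", "in", "it"}


@dataclass
class Message:
    sender: str
    text: str


@dataclass
class MemoryUnit:
    # Assumed structure of a "memory unit": topic keywords, people, raw messages.
    topic_keywords: frozenset
    participants: frozenset
    messages: list


def keywords(text):
    """Lowercase word tokens with stopwords removed."""
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS}


def segment_by_topic(messages, min_overlap=1):
    """Greedy segmentation: open a new segment when a message shares fewer
    than `min_overlap` keywords with the segment seen so far."""
    segments, current, current_kw = [], [], set()
    for msg in messages:
        kw = keywords(msg.text)
        if current and len(kw & current_kw) < min_overlap:
            segments.append((current, current_kw))
            current, current_kw = [], set()
        current.append(msg)
        current_kw |= kw
    if current:
        segments.append((current, current_kw))
    return segments


def extract_memory_units(messages):
    """Turn each topic segment into one structured memory unit."""
    return [
        MemoryUnit(frozenset(kw), frozenset(m.sender for m in seg), seg)
        for seg, kw in segment_by_topic(messages)
    ]


def build_index(units):
    """Organize units for later lookup: keyword -> positions of matching units."""
    index = {}
    for i, unit in enumerate(units):
        for k in unit.topic_keywords:
            index.setdefault(k, []).append(i)
    return index


chat = [
    Message("Alice", "The launch date for the mobile app is March 3"),
    Message("Bob", "Can the launch slip a week?"),
    Message("Carol", "Lunch order: pizza or sushi?"),
    Message("Dave", "Sushi please"),
]
units = extract_memory_units(chat)
index = build_index(units)
```

With this sample chat, the first two messages share the keyword "launch" and land in one unit, while the lunch discussion becomes a second unit. A production system would presumably rely on a language model rather than keyword overlap for both segmentation and extraction.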

Tanka x AGI

Amber@Baiguan: I have to ask a bigger question here: AGI is a topic that every entrepreneur in the industry is concerned with. It seems like what Tanka is working on is similar—combining AI with memory to make sense of things. What’s your take on this? From your years of experience, how do you see AGI’s future in this regard?

Dr. Lidong Bing@Tanka:

Well, I think my answer to the previous question ties into this. Joining a new organization has a lot to do with my views on AGI. I was attracted to Shanda AI Research Institute because of its focus on long-term memory and many ongoing products that integrate AI long-term memory, like Tanka. This aligns with my own view of AGI’s future.

As Kisson mentioned earlier, LLMs are becoming more powerful, but to build a truly intelligent system, it’s not just about having a large language model. For example, when we look at human intelligence, it has two components: one is reasoning ability, which corresponds to the pre-frontal cortex, and the other is memory, which corresponds to the hippocampus. These two need to work together to form real intelligence. For example, reasoning ability can tell you that you should call the person who gave birth to you "mom." However, if your hippocampus does not work properly and cannot tell you who gave birth to you, you still won’t know who your mom is. If we look back at the history of AI, it’s essentially trying to mimic human intelligence. In this context, the LLMs today primarily serve as the pre-frontal cortex, which is the reasoning function.

But LLMs can’t access all knowledge—especially private knowledge like internal company data, chats, or personal documents. That’s why, to build a complete intelligent system, we need a personalized memory system that is organization-oriented or individual-oriented. This memory needs to be long-term and continuously updated. Only when this memory system is built and integrated with an LLM can it truly serve specific individuals and organizations effectively.

Therefore, in my view, achieving AGI in the future will require the simultaneous development of both LLMs and long-term memory systems.
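The idea of pairing an LLM with a long-term, continuously updated memory can be sketched in a few lines of Python. This is a hedged illustration, not a description of Tanka's system: `MemoryStore`, `upsert`, `retrieve`, and `build_prompt` are hypothetical names, relevance is scored by simple word overlap, and the actual LLM call is left out. The point is only to show private knowledge being updated over time and then placed in front of a model as context.

```python
import re
from dataclasses import dataclass


def tokens(text):
    """Lowercase word tokens used for naive overlap scoring."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


@dataclass
class Memory:
    key: str
    text: str
    version: int = 1


class MemoryStore:
    """Toy long-term memory: one entry per key, overwritten on update."""

    def __init__(self):
        self._items = {}

    def upsert(self, key, text):
        # Continuous updating: a newer fact replaces the older one under the same key.
        old = self._items.get(key)
        version = old.version + 1 if old else 1
        self._items[key] = Memory(key, text, version)

    def retrieve(self, query, k=2):
        # Rank memories by how many words they share with the query.
        q = tokens(query)
        scored = [(len(q & tokens(m.text)), m) for m in self._items.values()]
        scored = [(s, m) for s, m in scored if s > 0]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return [m for _, m in scored[:k]]


def build_prompt(question, memories):
    """Assemble the text that would be sent to an LLM (the call itself is omitted)."""
    context = "\n".join(f"- {m.text}" for m in memories) or "- (no relevant memory found)"
    return f"Relevant organizational memory:\n{context}\n\nQuestion: {question}"


store = MemoryStore()
store.upsert("launch_date", "The app launch date is March 3")
store.upsert("lunch", "The team prefers sushi for lunch")
store.upsert("launch_date", "The app launch date moved to March 10")  # update

question = "When is the app launch?"
hits = store.retrieve(question, k=1)
prompt = build_prompt(question, hits)
```

Here the model itself never needs to have seen the launch date: the reasoning component receives the current, organization-specific fact from memory, and the outdated "March 3" entry is no longer retrievable.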

Small team, foundational research, big ambition

Amber@Baiguan: This makes me very curious because integrating AI with long-term memory sounds like a very ambitious and foundational research goal. I know Tanka’s team is not very big. What kind of confidence or thought process is behind your ambition to achieve such a goal? I’d love to hear your thoughts.

Kisson Lin@Tanka:

Well, for a startup, we are a pretty sizable team, with a few dozen people. But as you said, for such an ambition, we are indeed a very small team.

However, I believe that in the AI era, what matters is less the size of the team and more its passion and talent, and whether we are applying first-principles thinking to solve problems. Whether it's Elon Musk and his many companies, or DeepSeek, which pulled off a miracle recently, what they proved is that the key is whether we use more innovative methods and a first-principles approach to think about a problem, and whether we explore other, disruptive paths to find better solutions. I think this is a huge inspiration for us: the size of the team isn't the most important factor; what really matters is our determination and our capacity for innovation. If we are on the right track, scale will follow as the flywheel keeps turning.

Also, I think the AI era has enabled small teams, and in the future, even single-person companies are possible, because AI can already help us with many tasks.

Lastly, I think our partnership with Shanda is a tremendous asset. As I mentioned earlier, the Shanda AI Research Institute, where Dr. Bing is based, has a strong focus on fundamental AI research and areas like brain science. With Shanda’s solid support, I’m confident in our long-term competitive edge in AI research.

Amber@Baiguan: Very well, I can see your passion. It's very contagious. So, to wrap up, could you share with us any upcoming features we should be excited about?

Kisson Lin@Tanka:

Yes, there are so many. Basically, we release small new features every week. Personally, what I'm most looking forward to is this: we’ve already integrated with many communication tools, so you can use smart replies, and in the next stage we’re going to integrate with more tools like Notion, Google Docs, Jira, etc. AI will be able to generate and edit documents, manage projects, and more. So that’s what’s coming in the next phase: AI will take on even more tasks.

Secondly, we will combine AI with search and introduce a more native AI search experience.

And thirdly, as Dr. Bing just mentioned with the architecture diagram, we’ll continue exploring ways to enhance our model’s capabilities in multi-function calls and causal inference systems. In the future, AI will play a key role in decision-making. We aim to combine technology research with product development, enabling our AI to not only assist with writing replies, summarizing, and querying information but also to locate relevant memories and support intelligent decision-making.

I am really looking forward to all of these things!

Amber@Baiguan: That sounds very exciting. I’m looking forward to it. Our team is already trying out Tanka.

Kisson Lin@Tanka: Great. Let us know how we can be more helpful to your team.

If you’re interested in talking to the Tanka team, you may contact them via: marketing@tanka.ai